WASHINGTON (CNS) — The fourth revolution — the information revolution — is here.

Think the self-driving car, robots that have mastered legged locomotion, and machines that recognize faces, respond to speech, translate between languages and even answer questions.

It’s all part of the rapidly emerging fields of artificial intelligence and machine learning. Engineers and scientists are engaged in increasingly well-funded research into ever more complex machines that can “think” on their own and accomplish tasks once believed possible only for humans.


Humankind has encountered previous revolutions that have changed its thinking about its place in the universe: Copernicus’ heliocentric model of the solar system, Darwin’s theory of evolution, and Sigmund Freud’s research into the human psyche. Each had serious implications in its time.

The information revolution, however, also poses moral and ethical questions that humans are just beginning to raise alongside the rapid developments in artificial intelligence, known as AI.

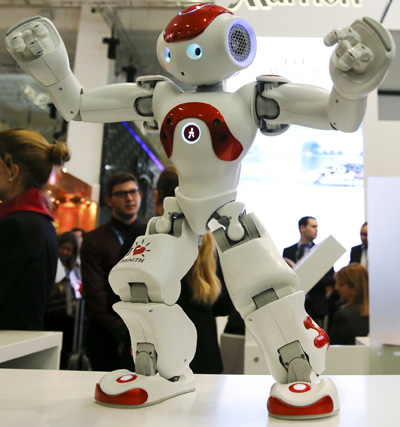

A Zora Bots humanoid robot dances at the Marriott exhibition stand at the International Tourism Trade Fair in Berlin March 9. (CNS photo/Fabrizio Bensch, Reuters)

While AI has not yet advanced to the point where robots are “super intelligent” and pose a physical threat to the existence of humanity, a scenario many works of science fiction have explored, people who think about such matters say the time has come for serious discussion of what it means to be human in a world in which smart machines are becoming more prevalent.

“The biggest challenge that our development in AI and robotics presents to us is a challenge to our human exceptionalism,” said David J. Gunkel, professor of communication at Northern Illinois University and the author of “The Machine Question: Critical Perspectives on AI, Robots and Ethics.”

“We have always thought of ourselves, in the medieval way of thinking, as the top of the chain of being,” he explained to Catholic News Service. “We’re now on the verge of creating machines that push against or at least challenge the position we’ve given ourselves.”


The questions are particularly meaningful in light of rapid advances in computing. A concept dubbed Moore’s law bears review. Gordon E. Moore, co-founder of Intel and Fairchild Semiconductor, observed in 1965 that the number of transistors in a dense integrated circuit was doubling roughly every year, a rate he revised in 1975 to approximately every two years. Contemporary semiconductor developers estimate that the pace has slowed to a doubling about every two and a half years. Even at that rate, some forecasts hold that by 2045 the world’s computing power will surpass the combined brainpower of all humans.

Some of the questions revolve around human labor (both physical and intellectual), autonomous weapons systems, policing robots, control of critical infrastructure and the widening gap between people with access to technology and those without.

“The ethical focus shouldn’t be so much on the computer — to say this is a good or a bad thing — but more on the motivation, the plans, the understanding, the intentions of those who are making (the machines) and those who are funding (the research),” said Jesuit Father James Murphy, associate professor of philosophy at Loyola University Chicago.

Father Murphy posed a basic question to researchers: “Have you thought through the ethics of what you are doing?”

“If you are doing that work and don’t realize (the ethics involved), that you do it because it can be done, that’s unethical,” he said.

Advances in autonomous weapons systems are one example of the concerns raised by Father Murphy and other scholars of AI ethics contacted by CNS.

“People don’t appreciate how many partially or wholly autonomous weapons systems are already deployed and in use around the world,” said Don Howard, professor of philosophy at the University of Notre Dame.

He cited the South Korean military’s deployment of machine-gun-wielding robot sentries along the border with North Korea that use heat and motion detectors to identify potential targets more than two miles away. While the system requires a human to fire the weapon, full autonomy is a possibility that should not be ignored, in Howard’s estimation.

“We need to think about this,” he said.

On another front, Howard said humanity must address the near-term challenge that smart robots are replacing human labor at an accelerating rate. Robots are beginning to replace workers not just in factories but even in service industries, including bars and restaurants, he said.

Significant progress has been made in developing machines that can provide basic care to the elderly. Other, more skilled jobs being taken over by smart machines include television and film animation, drafting and design work, and journalism.

Low-wage earners will be most affected, according to the most recent Economic Report of the President, released in February. The report uses data from several sources, including the Bureau of Labor Statistics and business studies, to show that people earning up to $20 an hour were 260 percent more likely to see their job automated than people earning $20 to $40 an hour and 2,700 percent more likely to see their job automated than people earning more than $40 an hour.

Howard, who teaches a class on robot ethics, urged society to consider carefully the importance of work, as St. John Paul II addressed it in his encyclical “Laborem Exercens” (“On Human Work”). “Doing a job well is one of the most important sources of psychological well-being,” Howard said.


The responsibility for posing such questions falls to all of society rather than just those in scientific fields and the deep thinkers of academia, Shannon Vallor, associate professor of philosophy at Jesuit-run Santa Clara University, explained in an email to CNS.

She called for improved education about the risks and benefits of AI in engineering, software design and computer science programs, greater professional and industry emphasis on the ethical development of emerging technologies, and better informed legislators who are able to “intelligently and responsibly regulate high-risk AI applications.”

Vallor suggested that the times call for “broader cultural reflection on AI and human flourishing.”

“What large aims should AI serve? Whose interests? What kind of future should we want AI to help us build?” are among the questions she posed.

People of faith also can have a role in determining how AI evolves, Vallor suggested, inviting them to “envision and work toward a technological future that is compatible with universal justice, compassion, hope and wisdom.”

The questions, though, are not limited to the deep thinkers of academia. Entrepreneur Elon Musk and theoretical physicist Stephen Hawking have joined with other innovative thinkers in cautioning that rapid advances in artificial intelligence, without some thought about what they mean for humanity, could threaten human survival.

Think tanks such as the Institute for Ethics in Emerging Technologies and the Future of Humanity Institute at Oxford University have echoed those concerns. The faith community, including the Catholic Church, has left the subject largely untouched.

Gunkel said his students often believe that ethical and moral questions are better addressed in the future when machines are more advanced. “My response is, ‘No, we have to talk about it now. We have to decide in advance of the technology (what is moral and ethical). Once the technology is here, it’s too late,’” he said.

He challenged the educational system to reintegrate the humanities into curricula so students — especially those seeking to enter science, technology, engineering or mathematics — have a well-rounded education and think about issues other than passing exams, going on to college and getting a job.

The key to the future of human-machine relations is to ask questions about the nature of the work in which a person is involved, Father Murphy said, because that is what humanity has been doing throughout its history.

“There is no fundamentally new ethical question about persons that the development of computers raises,” Father Murphy said. “It’s the same old questions, just in a different form or different guise.”
